Porting AutoGPT to NodeJS

I am not a Python developer, but I liked AutoGPT, so I decided to port it to NodeJS for my own purposes.

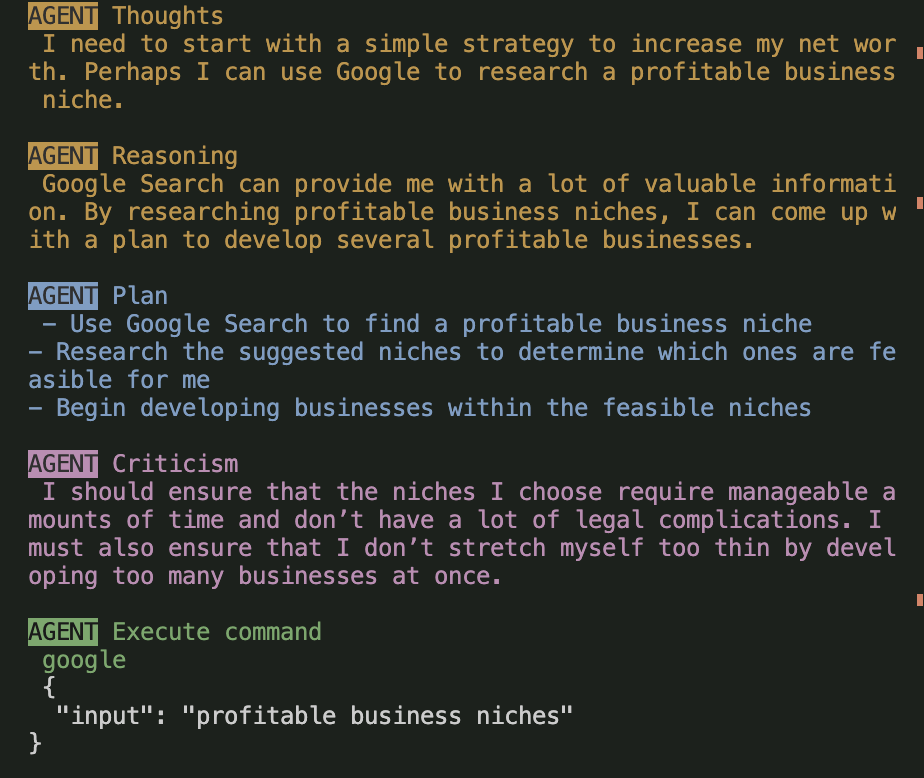

I first saw AutoGPT in a weekly roundup of GPT news, and it seemed like a really great implementation of GPT as an “independent entity”, also known as an agent, that performs tasks until it is satisfied they are finished. It uses many different prompt types to plan a list of tasks based on a goal, and then methodically executes, reviews, criticises and learns from its progress.
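To make the plan, execute and review cycle more concrete, here is a heavily simplified sketch of that kind of loop in JavaScript. It is not AutoGPT's actual code or prompts: `runAgent`, the prompt wording and the DONE/CONTINUE convention are all hypothetical, and `llm` stands in for any async function that sends a prompt to a model and returns its text reply.

```javascript
// Hypothetical sketch of a plan -> execute -> review agent loop.
// NOT AutoGPT's implementation; `llm` is any async (prompt) => string function.
async function runAgent(goal, llm, { maxSteps = 10 } = {}) {
  // 1. Plan: ask the model to break the goal into a list of tasks.
  const plan = await llm(`Goal: ${goal}\nList the tasks needed, one per line.`);
  const tasks = plan
    .split("\n")
    .map((line) => line.replace(/^\s*\d+[.)]\s*/, "").trim()) // strip "1." / "2)" numbering
    .filter(Boolean);

  const history = [];
  for (let step = 0; step < maxSteps && tasks.length > 0; step++) {
    const task = tasks.shift();

    // 2. Execute: ask the model to carry out the current task.
    const result = await llm(`Goal: ${goal}\nTask: ${task}\nDo the task.`);

    // 3. Review: ask the model to criticise the result and decide whether to stop.
    const review = await llm(
      `Goal: ${goal}\nLatest result: ${result}\nIs the goal complete? Answer DONE or CONTINUE.`
    );
    history.push({ task, result, review });

    if (review.trim().toUpperCase().startsWith("DONE")) break;
  }
  // The history is what a real agent would feed back into later prompts to "learn".
  return history;
}
```

Passing the model in as a plain function keeps the loop testable with a fake `llm`, and makes it easy to swap providers later.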

Obviously this package is very young and is not without issues. There seems to have been a recent influx of collaborators, and it is quite buggy. That is more of a problem for me because I am less familiar with Python, so troubleshooting it is more of a struggle (even with GPT-4 as an assistant).

I figured I’d have a go at porting the whole library to NodeJS, using GPT-4 to translate the Python into JavaScript. It’s a great case study in using GPT-4 for a medium-scale development project, rather than the zero-shot front-end UI generation you normally see examples of.

How did I do it?

Well, for starters, I’m nowhere near finished. It is mostly functional, but there are still some critical issues I need to resolve. However, the bulk of the application has been ported to NodeJS 18-compatible JavaScript.

I began by opening the OpenAI Playground and using chat completions with the GPT-4 model.

I used the following as the System prompt:

“You are an expert Python and NodeJS developer. Your job is to take python code and turn it into working NodeJS code. Complete all code if possible, do not omit any code for brevity. Limit the number of comments used unless absolutely necessary.”

I then pasted the entire .py file into the message box. GPT-4 gave me a pretty good translation in JavaScript, and in some cases explained in comments why certain substitutions had been made.
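I did all of this by hand in the Playground, but the same request can be made from Node. The sketch below assumes Node 18+ (for the built-in `fetch`), an `OPENAI_API_KEY` environment variable and the public Chat Completions endpoint; `buildTranslationRequest` and `translateFile` are hypothetical helper names, not part of any library.

```javascript
// Hypothetical sketch: the Playground workflow above, done via the Chat Completions API.
// Assumes Node 18+ (global fetch) and OPENAI_API_KEY set in the environment.
const fs = require("node:fs/promises");

const SYSTEM_PROMPT =
  "You are an expert Python and NodeJS developer. Your job is to take python code " +
  "and turn it into working NodeJS code. Complete all code if possible, do not omit " +
  "any code for brevity. Limit the number of comments used unless absolutely necessary.";

// Build the request body: system prompt first, then the whole .py file as the user message.
function buildTranslationRequest(pythonSource, model = "gpt-4") {
  return {
    model,
    messages: [
      { role: "system", content: SYSTEM_PROMPT },
      { role: "user", content: pythonSource },
    ],
  };
}

// Send one Python file for translation and return the model's JavaScript reply.
async function translateFile(pyPath) {
  const source = await fs.readFile(pyPath, "utf8");
  const res = await fetch("https://api.openai.com/v1/chat/completions", {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${process.env.OPENAI_API_KEY}`,
    },
    body: JSON.stringify(buildTranslationRequest(source)),
  });
  if (!res.ok) throw new Error(`OpenAI API error: ${res.status} ${await res.text()}`);
  const data = await res.json();
  return data.choices[0].message.content;
}
```

For example, `translateFile("some_module.py").then(console.log)` would print the translation of a (placeholder) Python file. Keeping the request construction in its own function makes it easy to inspect exactly what is being sent before spending any tokens.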

What are the limitations?

This method of porting a package from Python to NodeJS was incredibly fast. It has boosted my personal productivity and ability immeasurably; however, it is not without issues.

Dependency Hallucination

There are many cases where GPT-4 recommends an npm package and includes its usage in the generated code. The package may look very realistic and likely to exist, but every dependency GPT suggests should be checked very carefully.

Sometimes it points to packages that don’t exist at all: a complete hallucination. Other times it recommends the wrong package for the job, and even when the package does exist, the methods it calls usually don’t.

Trying to use the error messages to get GPT to resolve these hallucinations is equally frustrating, as it just keeps suggesting more hallucinations.

This is where some good old-fashioned manual troubleshooting, research and documentation reading comes in handy. I was able to replace all of the problem packages, and it was a fairly small chore, but it is a clear and consistent limitation of using GPT for code generation.
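One cheap first line of defence is to pull every package name out of the generated code and check it before running `npm install`. The sketch below is a hypothetical helper that assumes the generated code uses ordinary `require()` and `import` statements; its regular expressions are deliberately simple and will miss unusual forms. It can only confirm that a package exists on the npm registry (a 404 means it does not), so whether the suggested methods exist still has to be checked against the package's documentation. `npm view <package>` performs the same existence check from the command line.

```javascript
// Hypothetical helper: list third-party packages imported by a generated file.
const { builtinModules } = require("node:module");

function extractPackageNames(code) {
  const specifiers = new Set();
  // Simple patterns for require("x"), import ... from "x", import "x" and import("x").
  const patterns = [
    /require\(\s*['"]([^'"]+)['"]\s*\)/g,
    /import\s+(?:[^'"]*?\s+from\s+)?['"]([^'"]+)['"]/g,
    /import\(\s*['"]([^'"]+)['"]\s*\)/g,
  ];
  for (const re of patterns) {
    for (const match of code.matchAll(re)) specifiers.add(match[1]);
  }

  const packages = new Set();
  for (const spec of specifiers) {
    // Skip relative/absolute paths and explicit Node built-ins.
    if (spec.startsWith(".") || spec.startsWith("/") || spec.startsWith("node:")) continue;
    const parts = spec.split("/");
    // "@scope/pkg/sub" -> "@scope/pkg"; "lodash/fp" -> "lodash".
    const name = spec.startsWith("@") ? parts.slice(0, 2).join("/") : parts[0];
    if (!builtinModules.includes(name)) packages.add(name);
  }
  return [...packages].sort();
}

// Returns true if the npm registry knows the package, false if it answers 404.
// Existence says nothing about whether the methods GPT used are real.
async function existsOnNpm(name) {
  const res = await fetch(`https://registry.npmjs.org/${name.replace("/", "%2F")}`);
  if (res.status === 404) return false;
  if (!res.ok) throw new Error(`Registry error ${res.status} for ${name}`);
  return true;
}
```

Running `extractPackageNames` over each translated file and then `existsOnNpm` over the result gives a short list of definite hallucinations to fix before any debugging starts.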

Token limits

Token limits have come a long way since GPT-3, and with the roughly 8,000-token limit of GPT-4 completions we have a lot more room to play with. However, compared with the size of most web application codebases, this is still a seriously small workspace.

In an ideal world, the language model would have context of every file and line of code in your entire project. In this exercise of porting a Python library, however, I was only able to keep four or five files at a time in the chat history.

This leads to a few issues:

- Inconsistent variable naming and casing due to missing context
- Incorrect solutions or hallucinations in later files due to missing context
- Constant need to trim the chat messages in order to continue to translate files
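Trimming the history by hand gets tedious, so here is a rough sketch of doing it automatically: keep the system prompt, then drop the oldest messages until the conversation fits. It assumes the common rule of thumb of about four characters per token, which is only an estimate (code often tokenizes differently, so a real tokenizer would be more accurate), and `trimHistory`, `estimateTokens` and the 1,000-token reply reserve are hypothetical choices for illustration.

```javascript
// Hypothetical sketch: keep a chat history under a token budget.
// Token counts are ESTIMATED at ~4 characters per token, not measured.
function estimateTokens(text) {
  return Math.ceil(text.length / 4);
}

function trimHistory(messages, maxTokens, reserveForReply = 1000) {
  // Leave room for the model's answer inside the context window.
  const budget = maxTokens - reserveForReply;
  const system = messages.filter((m) => m.role === "system");
  const rest = messages.filter((m) => m.role !== "system");
  const count = (msgs) => msgs.reduce((sum, m) => sum + estimateTokens(m.content), 0);

  // Drop the oldest non-system messages until the estimate fits the budget,
  // always keeping at least the newest message (e.g. the file being translated).
  while (rest.length > 1 && count(system) + count(rest) > budget) {
    rest.shift();
  }
  return [...system, ...rest];
}
```

With the 8K GPT-4 model you would call something like `trimHistory(history, 8192)` before each request. Dropping whole messages from the front mirrors the manual trimming, but it is also exactly what causes the missing-context problems listed above: whatever gets trimmed, GPT-4 can no longer see.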

Date of available data

Developers usually want to use the latest version of a package or library so they can take advantage of new features and security updates. This highlights another huge limitation of GPT-4 for code generation: its lack of recent knowledge.

Many generations use out-of-date versions and syntax for libraries, which isn’t helpful when you’re using the latest version.

In many cases GPT-4 simply isn’t aware that certain libraries or features exist. The experimental Next.js App Router is one example that caused me quite a few headaches recently: GPT had absolutely no knowledge of it, and the documentation was not yet complete (to be fair, it is still in beta).
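A quick way to predict where GPT-4's suggestions will be stale is to check which of your dependencies were published after its training data ends; OpenAI has said that data mostly stops around September 2021. The sketch below is a hypothetical Node 18+ helper that reads a standard `package.json`, looks up each dependency's per-version publish dates in the npm registry's `time` field, and flags versions released after an assumed cutoff. The cutoff constant and all function names are assumptions for illustration, and a flag only means "GPT-4 has probably never seen this version", not that its code will definitely be wrong.

```javascript
// Hypothetical sketch: flag dependencies newer than the model's assumed knowledge cutoff.
const fs = require("node:fs/promises");

// Assumed cutoff, based on GPT-4's training data mostly ending in September 2021.
const CUTOFF = new Date("2021-10-01T00:00:00Z");

// Strip range operators: "^13.4.1" -> "13.4.1". Returns null for tags like "latest".
function baseVersion(range) {
  const match = /^[\^~>=<v\s]*(\d+\.\d+\.\d+)/.exec(range);
  return match ? match[1] : null;
}

// Pure check, testable offline: was this exact version published after the cutoff?
function isNewerThanCutoff(publishTimes, version, cutoff) {
  const published = version && publishTimes[version];
  return Boolean(published) && new Date(published) > cutoff;
}

// The full registry document maps each version string to its publish date under "time".
async function fetchPublishTimes(name) {
  const res = await fetch(`https://registry.npmjs.org/${name.replace("/", "%2F")}`);
  if (!res.ok) throw new Error(`Registry error ${res.status} for ${name}`);
  return (await res.json()).time;
}

async function findPostCutoffDeps(path = "package.json", cutoff = CUTOFF) {
  const pkg = JSON.parse(await fs.readFile(path, "utf8"));
  const deps = { ...pkg.dependencies, ...pkg.devDependencies };
  const flagged = [];
  for (const [name, range] of Object.entries(deps)) {
    const version = baseVersion(range);
    if (!version) continue; // skip tags, git URLs and other non-semver ranges
    const times = await fetchPublishTimes(name);
    if (isNewerThanCutoff(times, version, cutoff)) {
      flagged.push({ name, version, published: times[version] });
    }
  }
  return flagged;
}
```

Anything this flags is a package where reading the current documentation first will probably save more time than asking GPT-4.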

Why am I porting AutoGPT anyway?

I firmly believe AI-based agents using language models like GPT will revolutionise the way software works, the way humans work and the way the world works. This might sound like big talk, but the potential applications of this technology are absolutely insane.

I want to use my NodeJS version of AutoGPT as a development assistant. I’m not fussed about it being a general-purpose, one-size-fits-all AI agent; I’ll leave that to the other libraries. I want this agent to do one thing and do it really well: build software.

Ultimately, my goal is to produce a user-friendly UI that will allow somebody with very little training, knowledge or skill in building software to create applications using natural language.

I’ve seen some really promising experiments recently. Mckay Wrigley created a Siri-based agent that can spin up Next.js frontends, push them to a Git repo and deploy them on Vercel using only voice commands. I’d like to create something similar, but with a few more tools in the suite. I’m also personally not a fan of voice interfaces for development work, and I think there is still a lot of value in having a GUI.

Where can I download node-auto-gpt?

It isn’t finished yet, but I’m working on finishing it so I can use it as the basis for my next experiments. As soon as it is ready, I’ll share a repository with paid subscribers.